The rapid adoption of Generative AI (GenAI) has transformed how organizations operate, but it also casts a growing shadow over enterprise security. These tools bring real productivity gains, but their ability to access and extract sensitive data introduces new shadow AI risks for businesses.

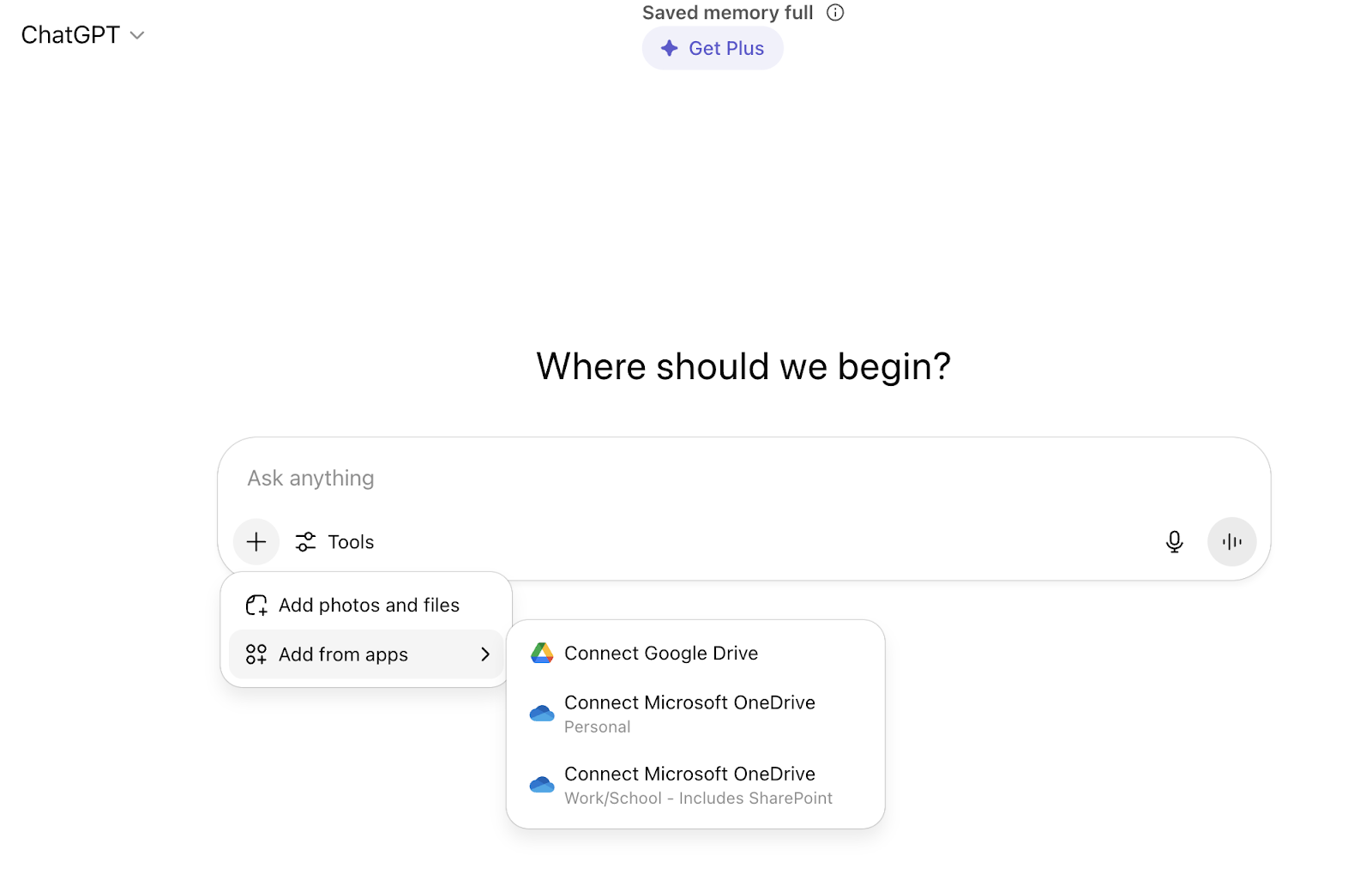

Recently, ChatGPT introduced meeting recording for business users, along with new direct connectors to cloud storage providers such as Google Drive, Box, SharePoint, and OneDrive. These integrations allow ChatGPT to query information across users’ own services to answer prompts. They also make it easier to leak sensitive data by streamlining how users share private information with GenAI models.

For security teams, the challenge isn’t just about preventing data leaks. It’s about learning how to identify and mitigate shadow AI risks introduced by unsanctioned GenAI apps in the workplace.

The Shadow AI Risks: How ChatGPT Introduces New Security Exposure

OpenAI's latest updates to ChatGPT are designed to enhance professional workflows. Business users can now:

- Record and Transcribe Meetings: ChatGPT can capture audio, transcribe it, summarize key points, and even generate action items.

- Connect to Cloud Drives: Direct integrations allow ChatGPT to search, analyze, and retrieve information from documents and files stored within these repositories.

If these features are not governed and approved by admins, they dramatically expand the attack surface for data exposure, especially when employees connect their business accounts to shadow SaaS apps that hold corporate data, such as a personal Dropbox. Without policies in place, this creates a critical "shadow AI" problem:

- Unsanctioned Data Flow: Corporate data from confidential meeting discussions or proprietary documents can now flow directly into a third-party AI service, possibly bypassing established security policies and controls.

- Ease of Integration, High Risk: The simplicity of connecting ChatGPT accounts to corporate cloud drives means that sensitive information can be pulled into the AI model with just a few clicks, often without the user fully understanding the implications.

- Hidden Data Copies: Meeting recordings and synced documents create new, potentially unmanaged copies of corporate data within a third-party application, complicating data governance and retention efforts.

- Legal and Compliance Headaches: The presence of corporate data in unauthorized AI services can lead to severe compliance violations and significant challenges in legal discovery processes, particularly during audits or M&A activities.

The Challenge: How to Identify and Mitigate Shadow AI Risks

The insidious nature of shadow AI lies in its speed and stealth. Traditional security tools often lack visibility into these user-driven integrations. Employees, trying to be productive, might unknowingly introduce risks by:

- Using Personal Accounts: Security teams struggle to monitor data flows from individual, unsanctioned ChatGPT or other GenAI accounts.

- Bypassing Network Controls: Direct AI-to-SaaS connectors operate outside the traditional network perimeter, making them difficult to detect with conventional security measures.

- Volume and Velocity: The sheer volume of data and the speed at which it can be integrated make manual detection and remediation impractical.

The Solution: Obsidian Security Detects Shadow AI Applications and Mitigates Risk

This escalating risk demands a modern, comprehensive approach to SaaS security. Obsidian Security provides the critical visibility and control needed to manage the evolving threat landscape of shadow AI and SaaS misconfigurations.

Obsidian's platform offers:

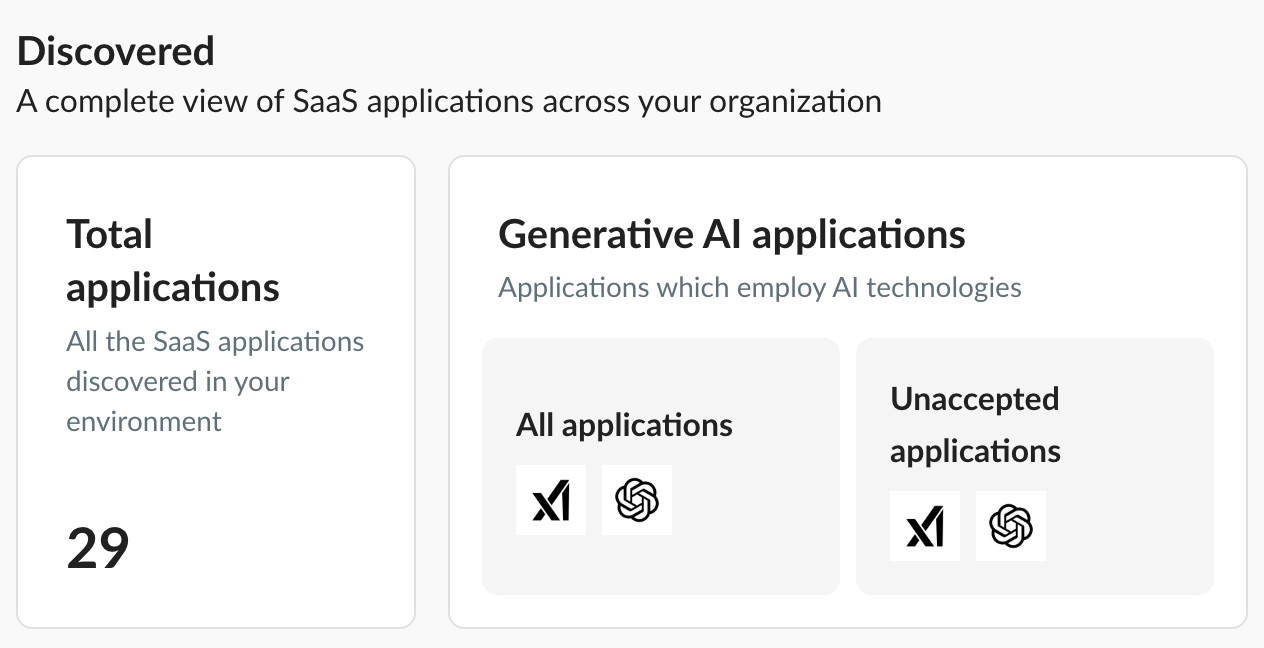

- Comprehensive Discovery: Obsidian automatically discovers all SaaS applications in use across your organization, including unauthorized GenAI tools like ChatGPT. This includes discovery through methods such as the Obsidian Browser Extension, which provides deep insight into user interactions with SaaS applications.

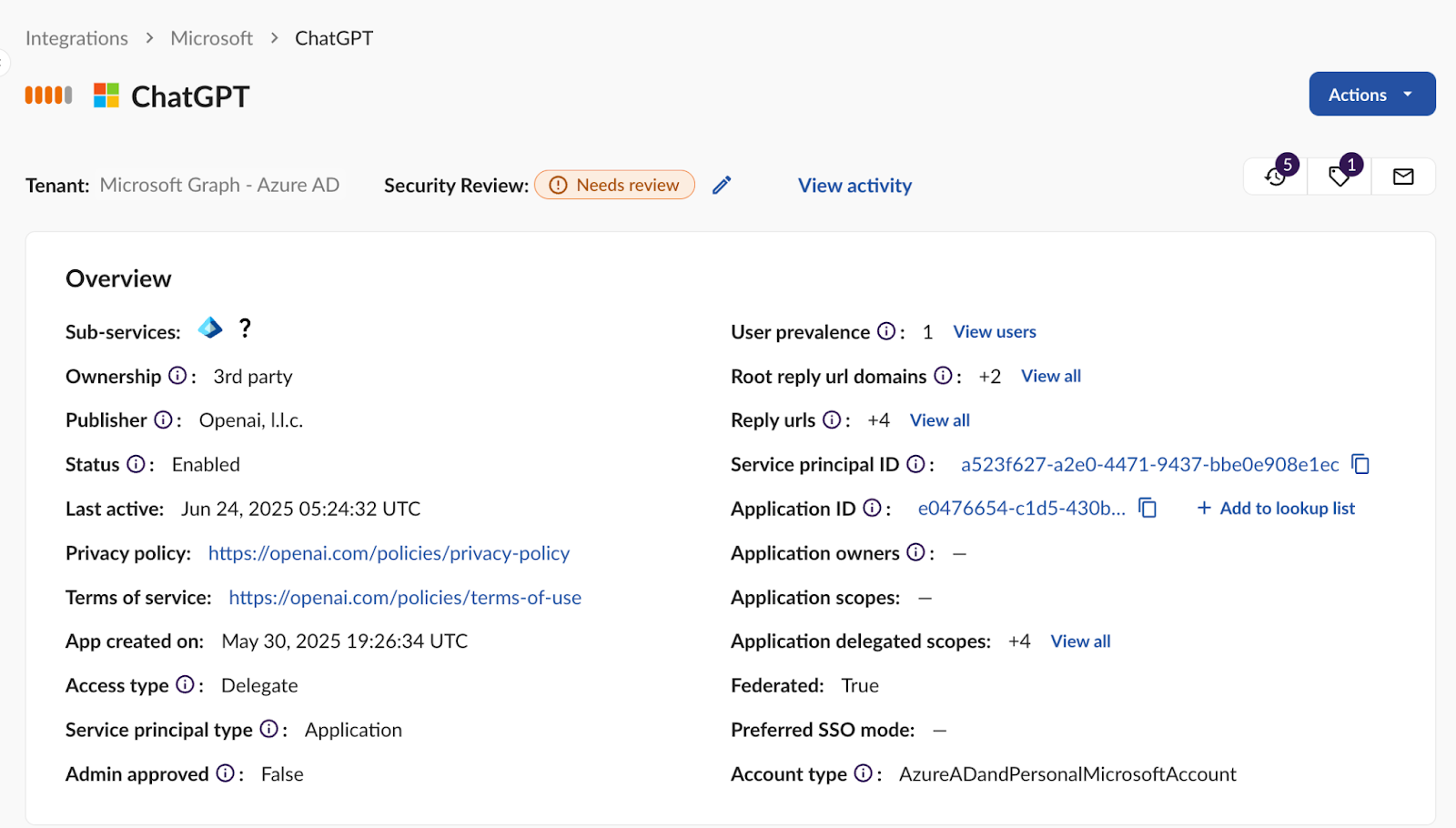

- Granular Posture Controls and Permissions Management: Beyond just discovery, Obsidian assesses the security posture of your SaaS applications and identifies risky configurations and overly permissive access. For instance, it can pinpoint third-party applications with excessive access to your Microsoft 365 (including OneDrive) or Google Workspace data. This allows you to:

  - Enforce Least Privilege: Identify and revoke unnecessary permissions that users have granted to AI apps.

  - Monitor Integrations: Gain visibility into whether ChatGPT and other GenAI services are connected to core cloud applications such as Microsoft 365 or Google Workspace.

  - Automate Remediation: Streamline the process of securing misconfigured settings and restricting risky integrations.

- Behavioral Monitoring and Threat Detection: Obsidian continuously monitors user behavior and data access patterns within SaaS environments to detect anomalous activities indicative of data exfiltration or policy violations related to shadow AI.
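To make the least-privilege and integration checks described above more concrete, here is a minimal sketch of the kind of rule a posture review might apply: given an exported list of OAuth grants, flag unsanctioned GenAI apps that hold broad file-access scopes. This is not Obsidian's implementation. The `Grant` record, the `risky_genai_grants` helper, the app names, and the watch-list of GenAI apps are all hypothetical; the scope strings themselves are real Google Drive API scopes and Microsoft Graph permission names, but which ones count as "broad" is an assumption.

```python
from dataclasses import dataclass

# Scopes treated as "broad" file access in this sketch (an assumption; tune per tenant).
BROAD_FILE_SCOPES = {
    "https://www.googleapis.com/auth/drive",           # Google: full access to Drive files
    "https://www.googleapis.com/auth/drive.readonly",  # Google: read all Drive files
    "Files.Read.All",                                   # Microsoft Graph: read all files the user can access
    "Files.ReadWrite.All",                              # Microsoft Graph: read/write all such files
    "Sites.Read.All",                                   # Microsoft Graph: read SharePoint sites
}

# Hypothetical watch-list of GenAI app names (lowercased for matching).
GENAI_APPS = {"chatgpt", "example-ai-notetaker"}


@dataclass(frozen=True)
class Grant:
    """One OAuth grant, e.g. a row from a hypothetical admin-console export."""
    user: str
    app_name: str
    scopes: frozenset
    sanctioned: bool


def risky_genai_grants(grants):
    """Return (grant, broad_scopes) pairs for unsanctioned GenAI apps with broad file scopes."""
    findings = []
    for grant in grants:
        # Skip approved apps and anything not on the GenAI watch-list.
        if grant.sanctioned or grant.app_name.lower() not in GENAI_APPS:
            continue
        broad = set(grant.scopes & BROAD_FILE_SCOPES)
        if broad:
            findings.append((grant, broad))
    return findings


# Usage with made-up example data:
grants = [
    Grant("alice@example.com", "ChatGPT", frozenset({"Files.Read.All", "User.Read"}), sanctioned=False),
    Grant("bob@example.com", "ChatGPT", frozenset({"User.Read"}), sanctioned=False),
    Grant("carol@example.com", "Approved-CRM", frozenset({"Files.Read.All"}), sanctioned=True),
]
for grant, scopes in risky_genai_grants(grants):
    print(grant.user, grant.app_name, sorted(scopes))
# alice@example.com ChatGPT ['Files.Read.All']
```

In practice, each finding would feed a review or revocation step; keeping the scope list and watch-list as plain data makes it easy to adjust as new connectors and GenAI apps appear.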

By providing unparalleled visibility into SaaS applications and their connections, Obsidian Security empowers security teams to proactively manage the risks associated with new, powerful AI capabilities like ChatGPT's meeting recording and cloud connectors.

Get Started: Detect Shadow AI Apps for Free

When left unmanaged, shadow AI apps across your organization become direct conduits for data leaks, regulatory violations, and an ever-expanding attack surface that traditional defenses simply can't see.

A proactive and strategic approach to managing shadow AI isn't just beneficial; it's essential. By prioritizing strong governance policies, enforcing robust access controls, and educating employees on responsible AI usage, organizations can confidently balance innovation with data integrity and organizational resilience.

Discover every GenAI app in your enterprise with Obsidian Security. Get started for free today!