Block leaks, not AI. Stop sensitive data from flowing to AI apps and browser extensions without slowing business productivity.

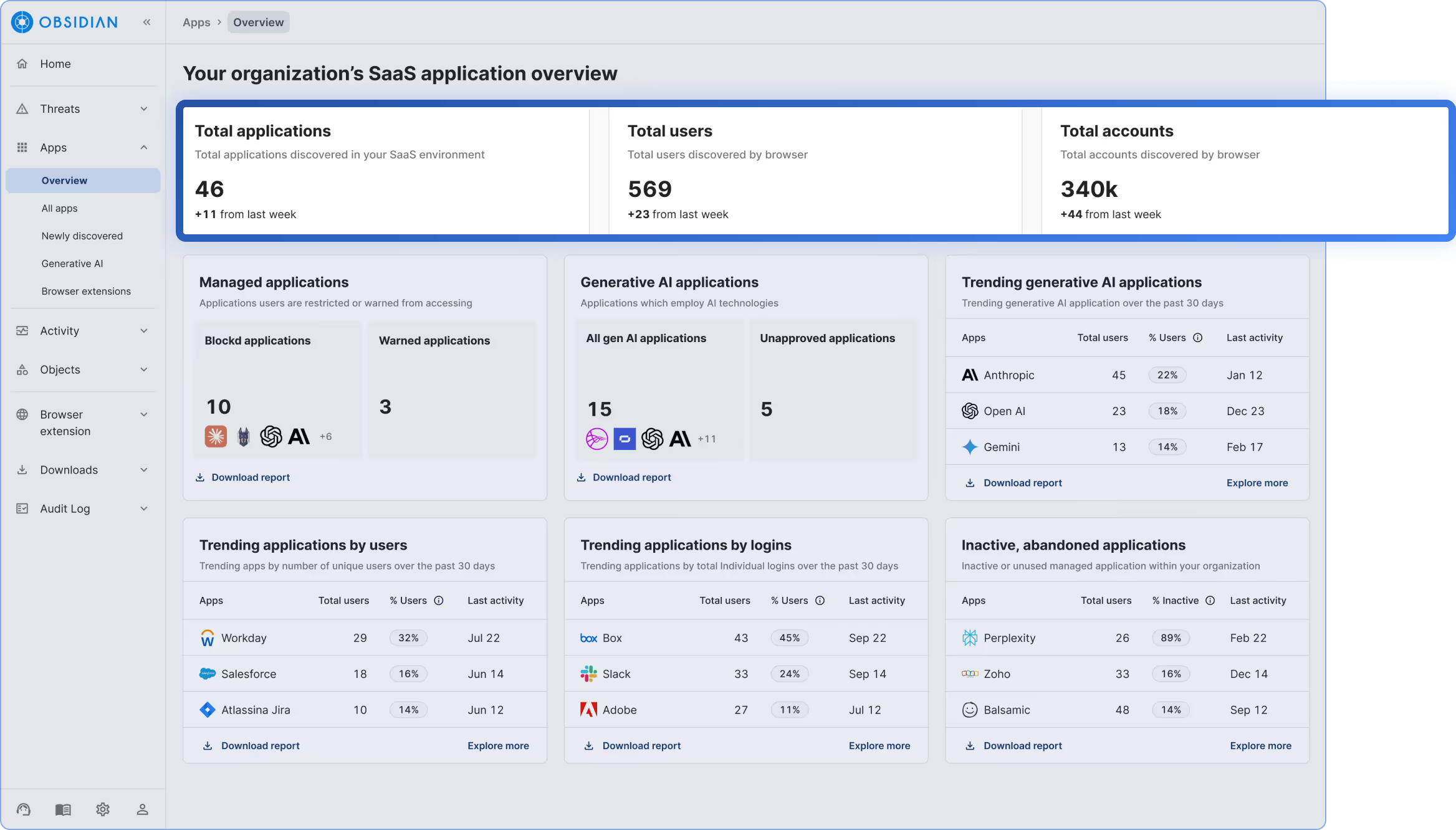

Get full visibility into every AI application across your environment with continuous discovery and classification. Track usage of every AI-powered app, browser extension, and hidden integration.

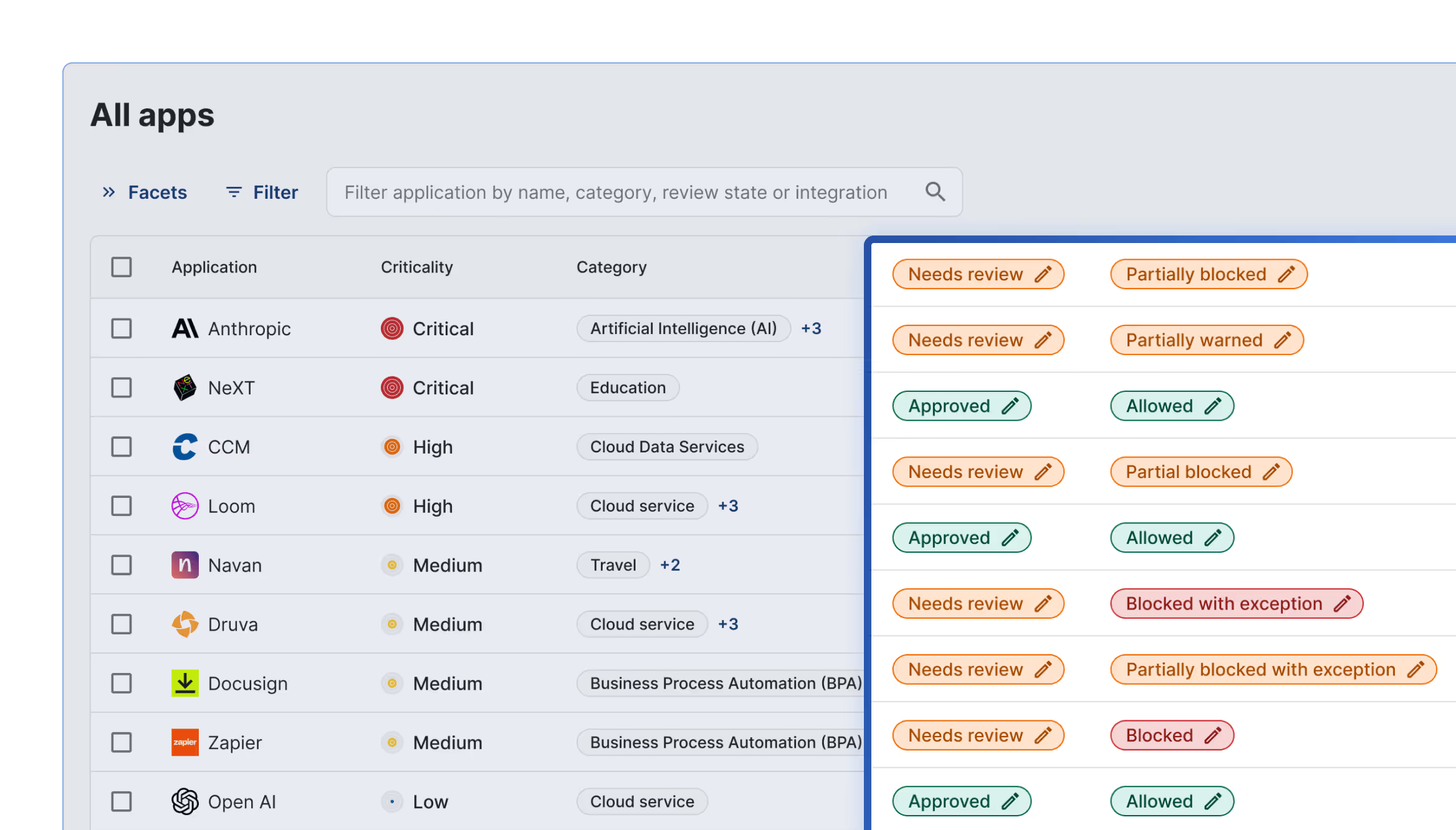

Understand and control users, activity, and risks in one unified view. Detailed login reports let you monitor access, investigate anomalies, and enforce policy to accelerate safe AI adoption across teams.

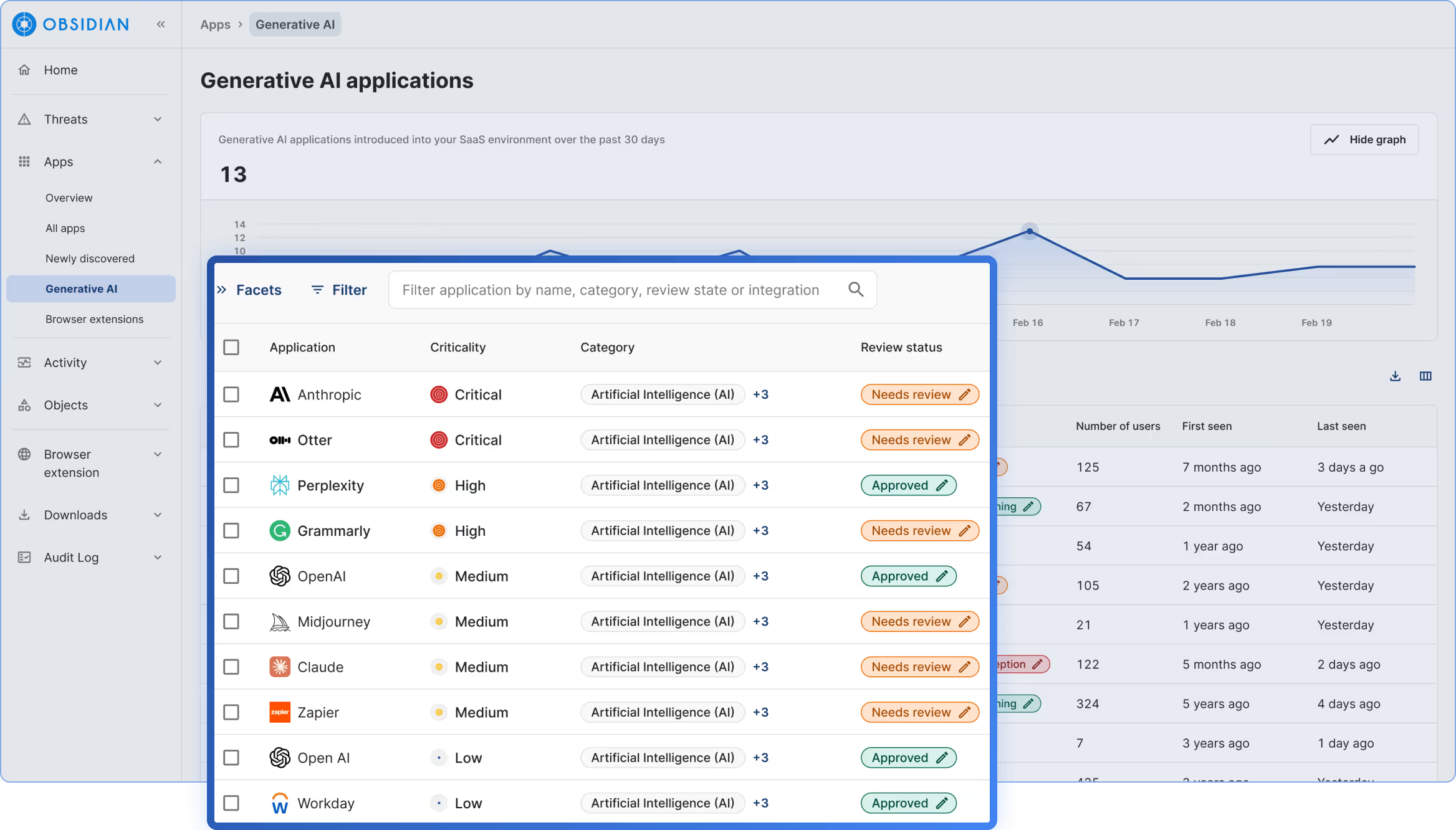

Allow access only to trusted GenAI apps to protect your organization's sensitive data. Restrict access to unauthorized, high-risk third-party models so that users adopt only sanctioned AI tools.

Enable secure AI access to data by evaluating integrations between AI and SaaS apps: enforce least privilege, reduce excessive permissions, and block unauthorized LLM access to sensitive corporate data to limit insider threats.

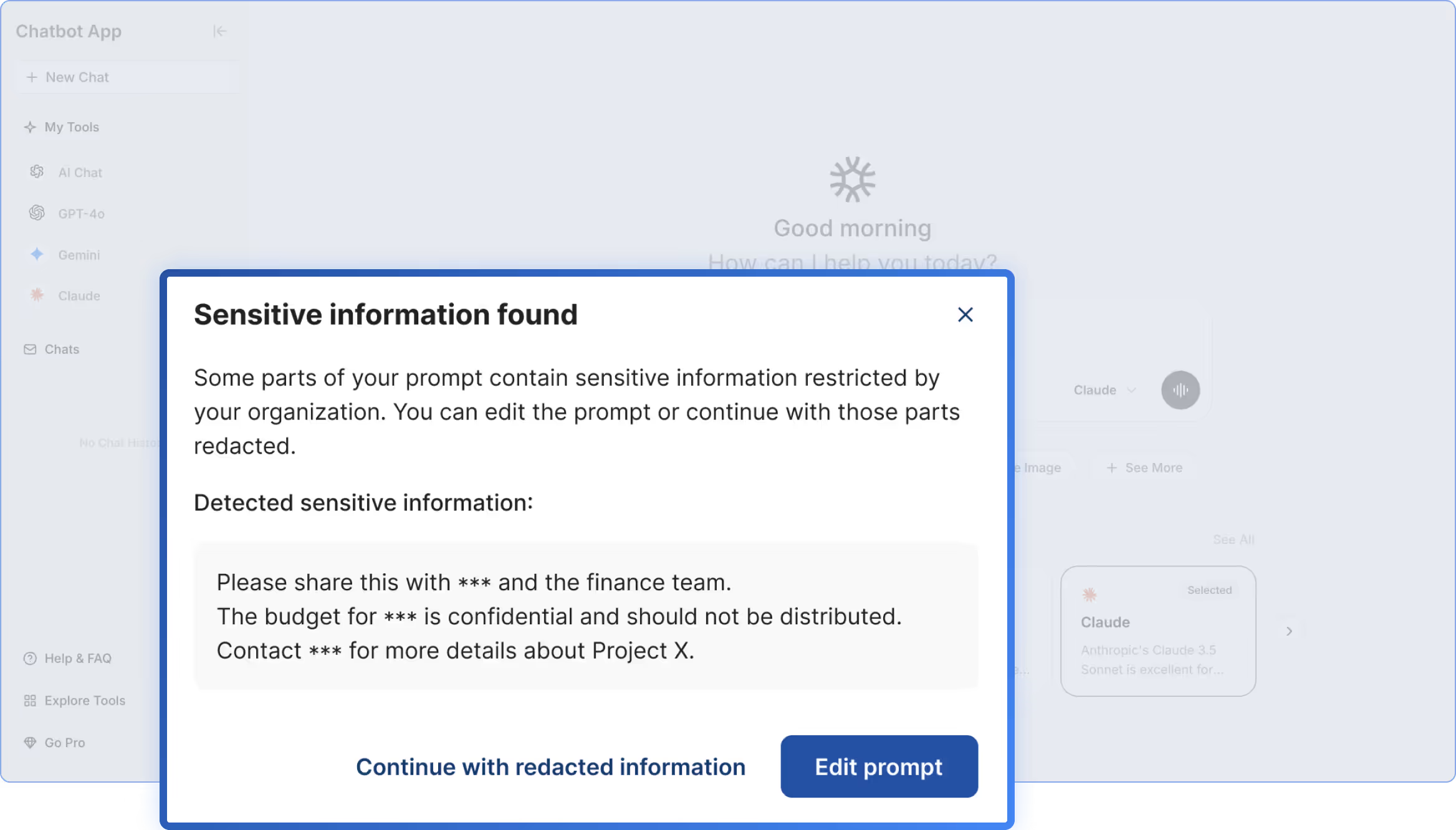

Ensure safe AI prompting by preventing users from entering classified data or documents into GenAI chatbot prompts, based on custom keywords or recognized data types.
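This page does not say how prompt inspection works internally. Purely as an illustration of the general idea, here is a minimal Python sketch, assuming a prompt is checked before submission against an admin-defined keyword list and a few regex-based data-type detectors. `PromptPolicy`, `screen_prompt`, and the detector names are hypothetical and are not this product's API; real DLP engines use much richer detection than these patterns.

```python
import re
from dataclasses import dataclass, field

# Hypothetical regex detectors for a few sensitive data types (illustrative only).
DATA_TYPE_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # AWS access key IDs start with "AKIA" followed by 16 uppercase alphanumerics.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


@dataclass
class PromptPolicy:
    """Hypothetical policy: custom keywords plus enabled data-type detectors."""
    keywords: set[str] = field(default_factory=set)
    data_types: set[str] = field(default_factory=set)


@dataclass
class Verdict:
    allowed: bool
    reasons: list[str]


def screen_prompt(prompt: str, policy: PromptPolicy) -> Verdict:
    """Block the prompt if it contains a custom keyword or an enabled data type."""
    reasons: list[str] = []
    lowered = prompt.lower()

    # Case-insensitive keyword match, anchored so "falcon" does not hit "falconry".
    for kw in sorted(policy.keywords):
        if re.search(r"(?<!\w)" + re.escape(kw.lower()) + r"(?!\w)", lowered):
            reasons.append(f"keyword:{kw}")

    # Run only the detectors the policy enables; unknown names are ignored.
    for name in sorted(policy.data_types):
        pattern = DATA_TYPE_PATTERNS.get(name)
        if pattern is not None and pattern.search(prompt):
            reasons.append(f"data_type:{name}")

    return Verdict(allowed=not reasons, reasons=reasons)
```

For example, with `PromptPolicy(keywords={"Project Falcon"}, data_types={"us_ssn", "email"})`, the prompt "Summarize the Project Falcon roadmap for 123-45-6789" is blocked with reasons `["keyword:Project Falcon", "data_type:us_ssn"]`, while "Write a haiku about spring" is allowed. Returning the list of reasons, rather than a bare yes/no, is one way to support the kind of activity reporting and investigation described above.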