GenAI use is exposing sensitive data. Get visibility and control—without blocking productivity.

Obsidian Security helps organizations detect and minimize GenAI risks, enabling safe and responsible use across the business.

Discover, control, and secure GenAI usage across your entire enterprise, from deployment down to the prompt level.

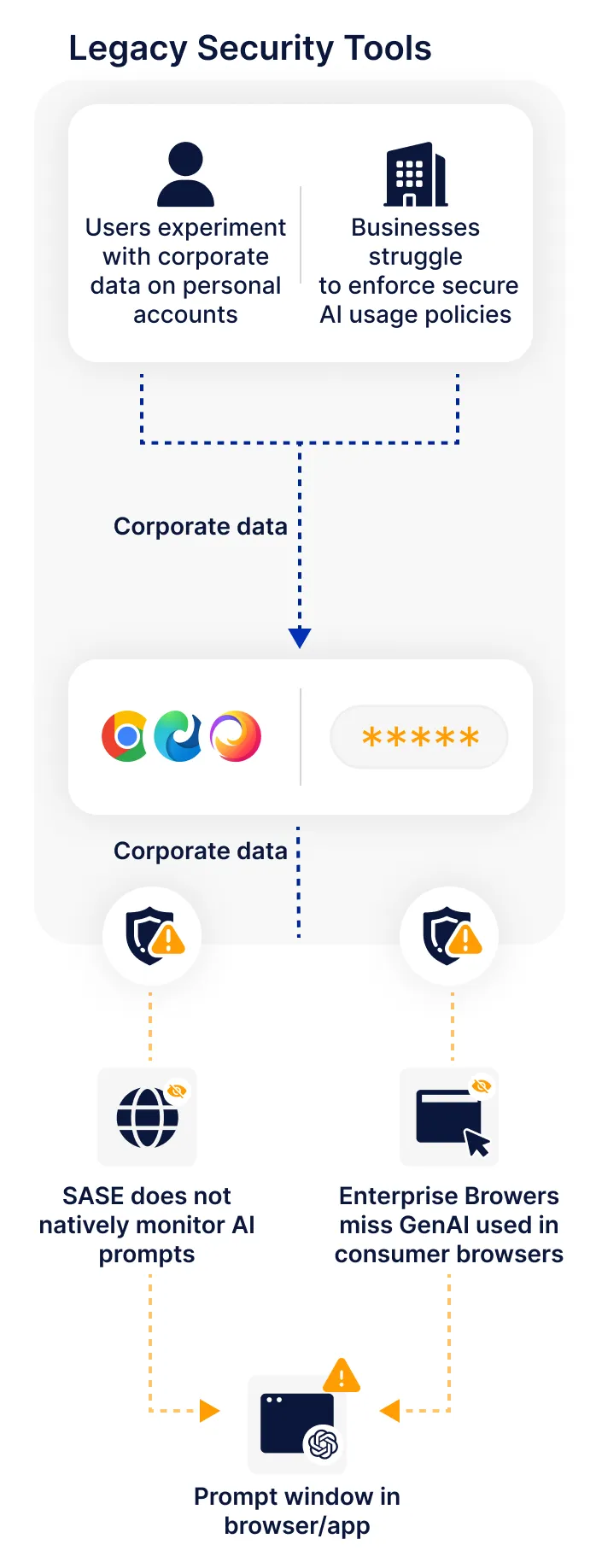

Get full visibility into every AI application across your environment with continuous discovery and classification.

Restrict access to approved GenAI applications only: prohibit unsanctioned use and manage users, activity, and risks in a single view.

Keep sensitive data from leaving the organization by redacting prompts that contain restricted information and controlling GenAI integration permissions.